With growing power and heat loads, and the need to minimise energy use, data centres are a challenge to the building services designer. Nick Vaney recommends a holistic approach

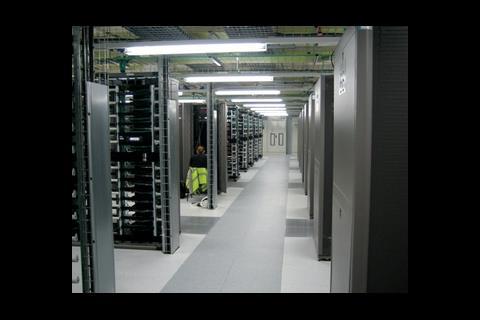

As computer technology continues to pack more processing punch into ever smaller spaces, data centre operators are optimising space by fitting greater numbers of smaller servers into the same area.

The reduction in server size is not, however, being matched by a reduction in power consumption, so heat densities are increasing. Consequently, the power requirements of data centres are rising – up to 30MWe is not uncommon – and heat loads are rising in parallel.

The challenge, therefore, is how to deal with these rising heat loads while reducing (or at least minimising) energy consumption and associated carbon emissions. The best way forward is to take a fresh look at established practices and question the validity of each in the light of technology improvements in modern data centres.

The obvious starting point is with the general design conditions. Traditionally, these would be a maximum temperature of 22ºC at 50% relative humidity, averaged across the space. These figures, however, were based on the performance criteria of older servers; modern servers can function well at higher temperatures.

The common argument against raising the temperature, though, is that it leaves less margin before the servers reach the critical temperature at which they overheat. In fact, with typical heat loads of about 1,500W/m² in modern server rooms, overheating will occur rapidly if the cooling system fails, even from 22ºC.
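A rough estimate shows why. The sketch below works out how fast the room air warms once cooling stops, assuming a 3m room height (a hypothetical figure) and counting only the thermal capacity of the air itself. Ignoring the thermal mass of servers, racks and structure overstates the rate, so treat the result as an order-of-magnitude indication, not a prediction.

```python
# Rough estimate of how quickly room air heats up when all cooling stops.
# Assumptions (hypothetical, not from the article): 3 m room height,
# air-only thermal capacity, no heat absorbed by servers, racks or structure.
AIR_DENSITY_KG_M3 = 1.2   # approximate density of air at room conditions
AIR_CP_J_KG_K = 1005.0    # specific heat capacity of air


def air_temperature_rise_rate(heat_flux_w_m2: float, room_height_m: float) -> float:
    """Return the rate of air temperature rise in K/s for a given floor heat flux."""
    # Thermal capacity of the air column above one square metre of floor (J/K).
    capacity_j_per_k_m2 = AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * room_height_m
    return heat_flux_w_m2 / capacity_j_per_k_m2


rate = air_temperature_rise_rate(1500.0, 3.0)
print(f"{rate:.2f} K/s, roughly {rate * 60:.0f} K per minute")  # 0.41 K/s, roughly 25 K per minute
```

The real rise is slower once equipment thermal mass is counted, but the order of magnitude suggests that a couple of degrees of extra starting headroom buys very little time.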

I would, therefore, argue for an appropriate level of resilience, so that the failure of one or two computer room air-conditioning (CRAC) units will not lead to design temperatures being exceeded. This is reflected in the growing number of data centres now adopting tier 3 (N+1) or tier 4 (2N or 2N+1) resilience.

In line with the possibility of designing for a higher room temperature, it makes sense to take a fresh look at chilled-water temperatures. Frequently, the 6ºC flow/12ºC return temperatures used in general buildings are carried across to data centres, where they are less appropriate.

These low chilled-water temperatures result in unwanted dehumidification of the data hall, because parts of the cooling coil surface sit below the dew point of the room air (about 11ºC at 22ºC and 50% RH), so moisture condenses out. The usual response is to rehumidify the space, but this uses valuable energy and treats the symptom rather than the cause.
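The dew-point argument can be checked numerically. The sketch below estimates the room dew point with the Magnus approximation (coefficients b = 17.62 and c = 243.12ºC, a commonly used set) and compares it with the mean of the chilled-water flow and return temperatures, for the 6/12ºC regime and a warmer 10/16ºC regime. Using the mean water temperature as a stand-in for coil surface temperature is a simplifying assumption; a real coil has a temperature profile, and its coldest section near the inlet may still condense.

```python
import math

# Magnus approximation coefficients (a commonly used set).
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # ºC


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point (ºC) of air at temp_c and relative humidity rh_percent."""
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * temp_c / (MAGNUS_C + temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def likely_to_dehumidify(flow_c: float, return_c: float,
                         room_c: float = 22.0, rh_percent: float = 50.0) -> bool:
    """Crude check: is the mean chilled-water temperature below the room dew point?

    The mean water temperature stands in for coil surface temperature,
    which is a simplifying assumption.
    """
    mean_water_c = (flow_c + return_c) / 2.0
    return mean_water_c < dew_point_c(room_c, rh_percent)


print(round(dew_point_c(22.0, 50.0), 1))   # 11.1
print(likely_to_dehumidify(6.0, 12.0))     # True: mean water 9ºC
print(likely_to_dehumidify(10.0, 16.0))    # False: mean water 13ºC
```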

Experience shows that a data hall can easily be maintained at 22ºC with higher chilled-water temperatures of, say, 10ºC flow and 16ºC return. This ensures there is no dehumidification and increases the opportunity for free cooling – typically about 2250 additional hours of free cooling per year in the UK. If data hall temperatures are allowed to rise to, say, 24ºC or 26ºC, there is scope for even more free cooling and energy saving.
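One simple way to see why a warmer flow temperature buys more free-cooling hours is to count the hours in which the ambient temperature, plus the approach of the free-cooling heat exchanger or dry cooler, is at or below the chilled-water flow temperature. The sketch counts full free cooling only (partial free cooling is ignored) and uses a hypothetical 4K approach and a short, made-up list of hourly temperatures; a real assessment would use a full year of hourly weather data for the site.

```python
from typing import Iterable


def free_cooling_hours(hourly_ambient_c: Iterable[float],
                       chw_flow_c: float, approach_k: float) -> int:
    """Count hours in which ambient + approach is at or below the flow temperature.

    Only full free cooling is counted; partial free cooling is ignored.
    """
    return sum(1 for t in hourly_ambient_c if t + approach_k <= chw_flow_c)


# Made-up sample of hourly ambient temperatures (ºC), for illustration only.
sample = [-2.0, 1.0, 3.0, 5.0, 7.0, 9.0, 12.0, 15.0]
APPROACH_K = 4.0  # hypothetical heat-exchanger approach

print(free_cooling_hours(sample, chw_flow_c=6.0, approach_k=APPROACH_K))   # 2
print(free_cooling_hours(sample, chw_flow_c=10.0, approach_k=APPROACH_K))  # 4
```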

In some situations, it is possible to take advantage of the higher return water temperatures by installing an additional, small heat recovery chiller to operate as a heat pump, recovering heat from the chilled-water return. This can be used to generate hot water at about 50ºC for space heating, heating of fresh air and pre-heating of domestic hot water.
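Why a warmer return helps the heat recovery chiller can be illustrated with the ideal (Carnot) heating coefficient of performance, T_hot / (T_hot − T_cold) in kelvin. Real machines achieve only a fraction of this limit, so the figures are upper bounds; the 12ºC and 16ºC source temperatures are the two return temperatures discussed above and 50ºC is the hot-water temperature from the text.

```python
KELVIN_OFFSET = 273.15


def carnot_heating_cop(source_c: float, sink_c: float) -> float:
    """Ideal heating COP of a heat pump lifting heat from source_c to sink_c (ºC)."""
    t_hot = sink_c + KELVIN_OFFSET
    t_cold = source_c + KELVIN_OFFSET
    return t_hot / (t_hot - t_cold)


for source in (12.0, 16.0):
    cop = carnot_heating_cop(source, 50.0)
    print(f"source {source:.0f}ºC -> 50ºC: ideal COP {cop:.1f}")
```

A smaller temperature lift raises the ideal COP, and real heat pumps broadly follow the same trend.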

Higher air volumes

Using higher chilled-water temperatures means the supply air is delivered warmer, so a smaller air temperature difference is available to carry the same heat load and higher supply air volumes are needed. There will therefore be some increase in fan power consumption, but this is more than compensated for by the savings derived from a more efficient chilled-water system. Where space is available, bespoke CRAC units with large fans can reduce fan power consumption even further.
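The link between air temperature difference and air volume follows from the sensible heat equation, V = Q / (ρ c_p ΔT). The sketch uses a hypothetical 100kW CRAC duty and compares an air-side temperature difference of 12K with one of 8K; both ΔT values are assumptions chosen only to show the trend.

```python
AIR_DENSITY_KG_M3 = 1.2   # approximate density of air
AIR_CP_J_KG_K = 1005.0    # specific heat capacity of air


def required_airflow_m3_s(cooling_w: float, air_delta_t_k: float) -> float:
    """Volume flow of air needed to remove cooling_w of sensible heat."""
    return cooling_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * air_delta_t_k)


duty_w = 100_000.0  # hypothetical 100 kW CRAC unit
for dt in (12.0, 8.0):
    print(f"dT {dt:.0f} K: {required_airflow_m3_s(duty_w, dt):.2f} m3/s")
```

Cutting the air temperature difference by a third raises the required air volume by half, which is why fan selection becomes important at higher chilled-water temperatures.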

Another benefit is that, as more air is moving around the space, there is less chance of hot spots developing. Also, more air delivered to the cabinets reduces the risk of them drawing in recirculated warm air from the room, a problem when air volumes are minimised.

In all cases, variable speed fans can provide further savings. For instance, if a server room needs eight CRAC units to meet the cooling load, it will normally be fitted with 10 so that two can be taken out of commission for maintenance. Most of the time, though, all 10 units will be running at part capacity. And because fan power varies with the cube of fan speed, a speed reduction of just 20% will reduce power consumption by nearly 50%.
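The arithmetic behind the cubic relationship, and what it means for the eight-duty, ten-installed example, can be sketched as follows. It uses the ideal fan affinity law and assumes the load is shared equally and that airflow is proportional to fan speed; motor and drive losses at part speed would trim the real saving.

```python
def fan_power_fraction(speed_fraction: float) -> float:
    """Fan affinity law: power scales with the cube of speed (ideal case)."""
    return speed_fraction ** 3


required_units, installed_units = 8, 10
# Assume the load is shared equally and airflow is proportional to fan speed.
speed = required_units / installed_units                 # 0.8
power_all_at_part_speed = installed_units * fan_power_fraction(speed)
power_duty_at_full_speed = required_units * fan_power_fraction(1.0)
saving = 1.0 - power_all_at_part_speed / power_duty_at_full_speed

print(f"power at 80% speed: {fan_power_fraction(0.8):.3f} of full")   # 0.512
print(f"10 units at 80% vs 8 at 100%: {saving:.0%} less fan power")   # 36%
```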

With fixed-speed pumps and three-port valves, the full volume of water must be pumped to all the CRAC units regardless of the cooling demand. With standby CRAC capacity typically of the order of 20-25%, the pumps and pipework must then be oversized by the same percentage, adding to the capital cost of the project.

Applying the same principles to the pumping system, by fitting two-port valves at the CRAC units so that they respond to the load demand and inverters on the pumps so that they follow the resulting changes in flow rate, can save about 30-50% of pumping energy.
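A simple model shows why variable flow saves pump energy, though less than the pure cube law would suggest. It assumes the pumps are controlled to hold a fixed differential pressure at the index circuit, so pump head falls from the design value towards that setpoint as the friction head (proportional to flow squared) drops. The 40% setpoint fraction and the constant pump efficiency are hypothetical assumptions, and the three-port constant-flow system is taken as drawing full design power all the time.

```python
def variable_flow_power_fraction(flow_fraction: float, setpoint_head_fraction: float) -> float:
    """Pump power as a fraction of design, with constant differential-pressure control.

    Head = setpoint + friction, with friction head proportional to flow squared.
    Pump efficiency is assumed constant, so power is proportional to flow x head.
    """
    head_fraction = (setpoint_head_fraction
                     + (1.0 - setpoint_head_fraction) * flow_fraction ** 2)
    return flow_fraction * head_fraction


SETPOINT = 0.4  # hypothetical: control setpoint is 40% of design head
for flow in (1.0, 0.8, 0.6):
    frac = variable_flow_power_fraction(flow, SETPOINT)
    print(f"flow {flow:.0%}: {frac:.2f} of design power, "
          f"saving {1 - frac:.0%} vs constant flow")
```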

Humidification

Another significant energy consumer is humidification of air in winter, often using electrode boiler humidifiers located in the CRAC units. As the only real latent gain or loss to the data hall is the fresh air supply, it makes sense just to treat the fresh air directly using a cold water jet spray humidification system – giving considerable energy savings.
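The size of the fresh-air humidification duty can be estimated from the fresh-air mass flow and the moisture content that must be added. The sketch assumes a hypothetical 2m³/s of fresh air, a winter outdoor moisture content of 0.003kg/kg and a target of 0.0082kg/kg (roughly 22ºC at 50% RH at sea-level pressure). For an electrode boiler it multiplies the water rate by about 2,630kJ/kg, the approximate enthalpy rise from 10ºC feed water to saturated steam at 100ºC, ignoring losses. A spray humidifier evaporates the same water using heat drawn from the air itself, so its electrical input is mainly pump power, although the air is cooled in the process.

```python
AIR_DENSITY_KG_M3 = 1.2
# Approximate enthalpy rise from 10ºC feed water to saturated steam at 100ºC.
STEAM_ENTHALPY_RISE_KJ_KG = 2630.0


def water_to_add_kg_s(fresh_air_m3_s: float, w_outdoor: float, w_target: float) -> float:
    """Mass of water per second needed to raise fresh air to the target moisture content."""
    return AIR_DENSITY_KG_M3 * fresh_air_m3_s * max(0.0, w_target - w_outdoor)


def electrode_boiler_kw(water_kg_s: float) -> float:
    """Electrical input to turn that water into steam, ignoring standing losses."""
    return water_kg_s * STEAM_ENTHALPY_RISE_KJ_KG


# Hypothetical design values for illustration only.
water = water_to_add_kg_s(fresh_air_m3_s=2.0, w_outdoor=0.003, w_target=0.0082)
print(f"water: {water * 3600:.0f} kg/h, "
      f"electrode boiler: about {electrode_boiler_kw(water):.0f} kW")
```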

Water-cooled chillers

Given that data centres already operate with stringent maintenance regimes, they are ideal for exploiting the efficiency benefits of water-cooled chillers and cooling towers. These benefits are highly significant – a water-cooled chiller in a well-designed system can offer an average annual CoP of 12, compared with a CoP of about four for an air-cooled chiller.
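The effect of the CoP figures quoted above on electrical demand is a simple division of cooling load by CoP. The sketch assumes a hypothetical 1,000kW cooling load held constant all year, which simplifies real operation but keeps the comparison clear.

```python
HOURS_PER_YEAR = 8760


def chiller_input_kw(cooling_kw: float, cop: float) -> float:
    """Electrical input needed to deliver cooling_kw at a given coefficient of performance."""
    return cooling_kw / cop


LOAD_KW = 1000.0  # hypothetical constant cooling load
for label, cop in (("water-cooled", 12.0), ("air-cooled", 4.0)):
    kw = chiller_input_kw(LOAD_KW, cop)
    print(f"{label}: {kw:.0f} kW, {kw * HOURS_PER_YEAR / 1000:.0f} MWh/year")
```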

In addition, if large water-cooled centrifugal chillers are used, there is only one compressor and one fan to maintain per chiller/cooling tower arrangement, compared with six compressors and 16 fans on an air-cooled chiller of comparable cooling capacity.

Free cooling is easily applied to the water-cooled chiller arrangement with the correct controls and hydraulic design, and it provides even greater savings than with air-cooled chillers, because cooling is then based on the ambient wet-bulb temperature, which is never higher than the dry-bulb temperature and is lower whenever the air is not saturated.
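The gap between wet-bulb and dry-bulb temperature can be estimated with Stull's 2011 empirical formula, which is intended for sea-level pressure, relative humidities of roughly 5-99% and temperatures from about -20ºC to 50ºC. It is an approximation chosen here for illustration, not a full psychrometric calculation, and the two sample conditions are arbitrary.

```python
import math


def wet_bulb_stull_c(temp_c: float, rh_percent: float) -> float:
    """Approximate wet-bulb temperature (ºC) using Stull's 2011 empirical fit."""
    t, rh = temp_c, rh_percent
    return (t * math.atan(0.151977 * math.sqrt(rh + 8.313659))
            + math.atan(t + rh)
            - math.atan(rh - 1.676331)
            + 0.00391838 * rh ** 1.5 * math.atan(0.023101 * rh)
            - 4.686035)


for t, rh in ((20.0, 50.0), (10.0, 80.0)):
    print(f"dry bulb {t:.0f}ºC, RH {rh:.0f}%: "
          f"wet bulb about {wet_bulb_stull_c(t, rh):.1f}ºC")
```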

There are other, more radical ways of saving energy, using renewable energy sources such as photovoltaic cells or wind turbines, but current payback periods tend to exceed the operating life of a data centre. There are also further considerations regarding how to ensure that these power sources meet the resilience requirements of a data centre.

There is an accepted principle in the computing industry, known as Moore’s law, which says that the number of transistors that can be placed inexpensively on an integrated circuit is increasing exponentially, doubling every two years. Over the decades we have seen this theory become reality, and the trend is expected to continue for at least another decade.
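Written as a formula, doubling every two years means the transistor count N after t years grows from a starting value N_0 as shown below; over the further decade mentioned, that amounts to five doublings.

$$
N(t) = N_0 \, 2^{t/2}, \qquad \frac{N(10)}{N_0} = 2^{10/2} = 2^{5} = 32
$$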

In the light of Moore’s law, it is clear that designing cooling systems for data centres will be ever more challenging. Every data centre has different requirements so there can be no off-the-shelf solution to suit all. But adopting an open-minded, holistic approach makes it possible to meet these challenges with effective, tried-and-tested engineering solutions.

Source

Building Sustainable Design

Postscript

Nick Vaney is a director of Red Engineering Design, which was voted Consultancy of the Year at the 2008 Building Services Awards

Original print headline 'Powering down for data protection'
