The data centre sector remains highly active and market growth has continued throughout the global recession. We examine the latest trends in low-energy data centre design, procurement and construction.

01/ Introduction

Global spending on IT is somewhere in the region of $3.7 trillion (£2.3 trillion), and a high proportion of this spend is on servers and storage which need to be housed in specialist data centre facilities.

Data centres are essentially warehouses or industrial buildings that provide a highly secure and resilient operating environment for IT equipment, without drawing undue attention to the highly sensitive nature of their contents. They may be, above all, highly functional buildings, but their design is evolving quickly, fuelled by the ever increasing need for efficiencies and the take-up of cloud-based computing solutions.

A further shift in data centre procurement has been the focus on value rather than just cost, as clients strive to future-proof schemes. One area of concern for developers and investors is the perceived rate of obsolescence of equipment. But that risk can be managed by only buying the plant for the tenant when it is needed. The smart investor will procure the frame of the space, thus removing planning/permit and construction risk, then fit out with the latest available technology to suit the tenant, at the point he signs for the space.

As the sector matures we are now seeing a less opaque market. Previously customers and tenants have found navigating through this market almost impossible, but we are increasingly seeing deals based on a clearer idea of the total cost of occupancy rather than the outgoings as separate line items. Institutions and funds are much more inclined to invest in a sector when there is market visibility, with fewer hidden costs. This all helps to mitigate risk. This in turn means there will likely be new entrants in the market in the coming years.

02/ Demand for Data Centres

Demand for data centres continues to be driven by three groups of clients: owner-occupiers such as banks; tenants such as internet operations who take space in co-location centres; and managed service companies who need large scale IT capability to run large programmes such as the NHS IT project. This demand is currently met by owner-occupier “build to suit” and co-location projects.

Demand for space has been affected by the economic downturn and this has resulted in a step change in the scale of speculative investment in the sector. As with many aspects of the recession, investor appetite to gamble on the timing of the next period of rapid expansion in the sector has been mixed, but there have still been significant investments in very large schemes in the UK and Europe.

Marketing initiatives launched by some EU regions to attract data centre investment continue, with Google reportedly investing $1bn in Finland alone. Industries and businesses’ increased requirements for unified communications platforms, new media services and social media continue to drive significant expansion. As use of IT continues to rise, the real constraint on growth and principal source of risk will be the availability of power from renewable sources and the ability of a data centre operator to use it effectively.

As the institutional investment market becomes better placed to fund data centre schemes, greater competition will emerge. The industry standard is for rentals to be expressed per kW pa. Rentals vary enormously depending on the location, length of commitment by the tenant and the covenant strength, but generally they start between £1,000 and £2,000 per kW pa for wholesale net technical space.
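As a rough illustration of how a rent quoted per kW pa translates into an annual figure, the sketch below multiplies a contracted power allocation by the rate. The 500kW allocation and the function name are hypothetical and chosen only for the example; the £1,000 and £2,000 rates are the starting range quoted above.

```python
def annual_rent_gbp(contracted_kw: float, rate_gbp_per_kw_pa: float) -> float:
    """Annual rent for a tenant paying a fixed rate per contracted kW per annum."""
    if contracted_kw < 0 or rate_gbp_per_kw_pa < 0:
        raise ValueError("inputs must be non-negative")
    return contracted_kw * rate_gbp_per_kw_pa


# Hypothetical tenant taking 500kW of wholesale net technical space (assumed figure).
contracted_kw = 500
low = annual_rent_gbp(contracted_kw, 1_000)   # bottom of the quoted starting range
high = annual_rent_gbp(contracted_kw, 2_000)  # top of the quoted starting range
print(f"Annual rent: £{low:,.0f} to £{high:,.0f}")
```

Actual deals will differ with location, lease length and covenant strength, so this is an order-of-magnitude check rather than a pricing tool.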

03/ Design Considerations

The key factor in data centre planning and design is ensuring that the reliability and availability of the engineering services is as high as possible (see section 5 below). The accepted classification of data centre resilience is the four-tier system developed by the Uptime Institute, with tier 4 guaranteeing the highest level of availability.

However, in addition to securing resilient power and IT connectivity, the successful delivery of data centres depends on the following design principles:

- Simplicity Human error during operation is a major source of downtime risk, so the use of simple solutions - albeit as part of very large systems - is the preferred design strategy. This is particularly the case with the configuration of back-up capacity, where the drive to reduce the investment in central plant can result in highly complex controls and distribution.

- Flexibility With many facilities housed in warehouses and industrial buildings, developers enjoy high levels of flexibility with regard to layout and floor loadings. It is important to note that data centres operate on short timeframes - servers are replaced in a three-year cycle, so a building will accommodate 10 or more technology upgrades during its operational life. The flexibility to accommodate change without major work to the physical or IT infrastructure is very valuable.

- Scalability Many centres will not initially run at full capacity. A centre must be able to accommodate sustained growth, making best use of plant capacity, without any interruption to services. This requirement has implications for the design of all aspects of the building, building services and information architecture and, most recently, power usage effectiveness (PUE) at low load.

- Modularity A modular approach is focused mostly on main plant, providing the overall capacity required in a number of smaller units. This means very complex systems can be organised to allow scalability.

- Whole-life costs An understanding of the total cost of solutions is very valuable, as IT system managers often impose very arduous availability requirements without understanding the cost implications, which can vary considerably over the life of a facility.

04/ Cost drivers

Data centres have a unique set of cost drivers. Conventional measures of efficiency used in commercial buildings do not apply when occupancy levels are 100 times lower than a conventional office and cooling loads 10 times higher. The key cost drivers are:

- Extent of technical area This is the conditioned space known as data halls or “white space”.

- Power and heat load density This is determined by planned server density. Average loads in the most recent data centres range from 1,000 to 1,500W/m2, often with additional high density provisions to accommodate cabinets with cooling loads of 10kW or more. By contrast, power loads in conventional offices are no higher than 25-35W/m2.

- Resilience Typically this will be defined by the tier rating. The selected tier rating will have significant impact on the cost of the engineering services and associated accommodation requirements to house necessary plant and, in the case of tier 4, fully diverse service routes for alternative services and IT infrastructure. There is a significant step between tiers 1-2 and tiers 3-4, where fully diverse services and IT paths are introduced.

- Energy efficiency This is normally measured by the power usage effectiveness metric (PUE), which is the ratio of the total power input to the data centre to the power used by the IT equipment. Emphasis on efficiency will often have an effect on capital cost, and the selection of solutions, plant and equipment needs to be carefully considered against actual anticipated usage. Data centre energy use is very high, so energy efficiency payback periods can be relatively short compared with other users.

- Control and monitoring Highly resilient control and monitoring allows the management and operations team to manage the facility reliably. These systems might include industrial control and building management systems, plus ancillary systems for generators and so on. In addition there has been a significant increase in the monitoring and management of power and cooling. This information can be used for reporting and analysis and ultimately to improve efficiency.

- Balance of space Plant space, storage and IT assembly areas, administration accommodation and so on are relatively low impact cost drivers, as building costs often account for no more than 20% of overall costs. However, adequate and well planned space - particularly storage and other technical space - is important to effective, long-term operation.

- Expansion strategy This will determine the initial capital spend on base infrastructure such as power supply (typically high voltage), main cooling plant, distribution and so on. The strategy will also have an impact on running costs. While some modern equipment will operate more efficiently at less than its maximum output, operation of too much plant at very low loads is likely to be significantly inefficient. Modular approaches to the provision of chillers, room cooling, standby generation and UPS provide a high level of scalability and potential for lower cost diversity of supply. Some systems, however, such as HV switchgear, primary cooling distribution and so on, are more practical and economic to provide as part of the first phase of development.

05/ Resilience and availability

As all aspects of life become more dependent on continuous, real-time IT processing, user requirements for guaranteed availability have increased.

At one end of the scale, financial transactions, access to health records and military security applications require absolute security of operation, a requirement that comes with significant capital and running cost implications. However, most processing has less critical availability requirements and can be accommodated in centres which provide a lower level of resilience.

As previously mentioned, the accepted definition of data centre availability is the Uptime Institute’s four-tier system. Depending on the level of availability guaranteed, data centre developers and operators have to provide an increasing degree of system back-up. For example, a tier 4 data centre will be 50% larger and three times more expensive per unit of processing space than an equivalent tier 2 data centre.

Systems that are affected by redundancy/resilience requirements include:

- Physical security The minimisation of risks associated with the location of the building and the provision of physical security to withstand disaster events or to prevent unauthorised access.

- Power supplies Very secure facilities will require two separate HV supplies, each rated to the maximum load. However, as HV capacity is restricted in many locations, a fully diversified supply is costly and difficult to obtain, and carries an opportunity cost in terms of overall grid capacity. For tier 1 and tier 2 data centres, a single HV feed will be used.

- Standby plant For tier 1 data centres a single generator “n” may be sufficient and for tier 2 this would be expanded to two generators, or n+1 depending on load/rating. In tier 3 and tier 4 data centres, the generation plant is deemed to be the primary source of power with the mains power being an “economic” alternative power source. The higher tier ratings demand duplicate plant to meet concurrent maintenance and fault tolerance criteria.

- Central plant Plant will be provided with a varying degree of back-up to provide for component failure or cover for maintenance and replacement. On most data centres designed to tier 3 or below, an n+1 redundancy strategy is followed whereby one unit of plant is configured to provide overall standby capacity for the system. The cost of n+1 resilience is influenced by the degree of modularity in the design. For example, 1,500kW of chiller load provided by 3 x 500kW chillers will typically require one extra 500kW chiller to provide n+1 backup. Depending on the degree of modularity, n+1 increases plant capacity by 25-35%. For tier 4 data centres, the provision of 2n plant is typically required, which increases capacity by 100%.

- LV switchgear and standby power Power supplies to all technical areas are provided on a diverse basis. This avoids single points of failure and provides capacity for maintenance and replacement. The point at which diversity is provided has a key impact on project cost. Tier 3 and 4 solutions require duplicate system paths feeding down to power distribution unit (PDU) level.

- Final distribution Power supply and cooling provision to technical areas are the final links in the chain. Individual equipment racks will have two power supplies. Similarly cooling solutions can be required to be connected to multiple cooling circuits and terminal units. For tier 1 and 2 solutions the overall number of terminal units will be calculated to provide sufficient capacity at peak cooling load even if units are being maintained or have failed. For tier 3 and 4 solutions duplicate capacity is required in the cooling connections to the units as well as the units themselves. Where evaporation-based cooling solutions are adopted, water supply is also a key factor in system resilience.
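The effect of modularity on the cost of back-up plant, described under "Central plant" above, can be sketched with a short calculation. The function names and the strategy labels are illustrative assumptions; the 1,500kW load and 500kW chiller size are the figures used in the text, while the 375kW unit size is an assumed alternative added for comparison.

```python
import math


def installed_capacity_kw(load_kw: float, unit_kw: float, strategy: str = "n+1") -> float:
    """Total installed plant capacity for a load served by equal-sized units."""
    n = math.ceil(load_kw / unit_kw)  # units needed just to meet the load ("n")
    if strategy == "n":
        units = n
    elif strategy == "n+1":
        units = n + 1          # one extra unit as standby
    elif strategy == "2n":
        units = 2 * n          # fully duplicated plant
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return units * unit_kw


def uplift_percent(load_kw: float, unit_kw: float, strategy: str) -> float:
    """Extra installed capacity relative to the bare 'n' provision, in percent."""
    base = installed_capacity_kw(load_kw, unit_kw, "n")
    return 100.0 * (installed_capacity_kw(load_kw, unit_kw, strategy) - base) / base


# 1,500kW of chiller load, as in the example in the text.
print(uplift_percent(1500, 500, "n+1"))  # 3 + 1 units of 500kW: about 33%
print(uplift_percent(1500, 375, "n+1"))  # 4 + 1 units of 375kW (assumed size): 25%
print(uplift_percent(1500, 500, "2n"))   # duplicated plant: 100%
```

Smaller modules reduce the size of the standby unit, which is why the n+1 uplift falls as modularity increases, whereas 2n always doubles the installed capacity.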

06/ Sustainability

Electricity consumption by data centres represents between 1.5% and 2% of total global energy use. This represents a huge carbon footprint and a major draw on scarce generation capacity.

Despite the obvious benefits of a green strategy, IT clients have tended to focus on their absolute requirements for performance and security. But as power costs increase, the agenda is slowly changing. There are a number of strands to data centre energy reduction and sustainability.

These include:

- Optimisation of server performance This is concerned with getting maximum processing power per unit of investment in servers and running costs. “Virtualisation” of server architecture, so that processes are shared, is currently providing an opportunity to maximise server use. Turning off servers that aren’t being used and using server standby functionality also help.

- Appropriate definitions of fault tolerance and availability Users are being encouraged to locate operations which can tolerate lower levels of security in lower tier centres which run more efficiently.

- Increasing data centre efficiency Primary power load is determined by the number and type of servers installed and cannot be affected by data centre designers and operators. Their contribution is to reduce the total data centre power requirement relative to server consumption. This is measured by the PUE. The US Environmental Protection Agency estimates that current data centres typically achieve an operational PUE of 1.9. In the UK, new data centres will typically target a PUE of between 1.5 and 1.2, with the most efficient designs aiming for 1.15 or lower.
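To put the PUE figures above into context, the following sketch converts a PUE value into the non-IT overhead (cooling, UPS losses, lighting and so on) for a given IT load. The 1,000kW IT load and the assumption of constant year-round operation are illustrative only and do not come from the article.

```python
HOURS_PER_YEAR = 8760  # assumes continuous operation at a constant load


def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Power drawn by everything other than the IT equipment."""
    return it_kw * (pue_value - 1.0)


def annual_overhead_mwh(it_kw: float, pue_value: float) -> float:
    """Annual non-IT energy in MWh, assuming constant load all year."""
    return overhead_kw(it_kw, pue_value) * HOURS_PER_YEAR / 1000.0


it_load_kw = 1000  # hypothetical IT load
for label, value in [("typical existing", 1.9), ("new UK target", 1.2)]:
    print(f"{label}: PUE {value}, overhead {overhead_kw(it_load_kw, value):.0f}kW, "
          f"{annual_overhead_mwh(it_load_kw, value):,.0f}MWh a year")
```

On these assumptions, moving from a PUE of 1.9 to 1.2 cuts the overhead from roughly 900kW to roughly 200kW for the same IT load, which is why payback periods on efficiency measures can be short.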

Approaches to achieving energy savings in data centres include:

- Management of airflow in the computer room Most data centres use air to remove the heat generated by the IT equipment. Data centres are normally organised with the IT racks orientated so that all of the equipment sucks in the cold air for cooling from a “cold aisle” and blows out the used hot air into a “hot aisle”. The efficiency of this system is significantly increased by the use of containment strategies to box in either the hot or the cold aisle and limit the mixing of the hot and cold air streams as much as possible.

- Variable speed motors These enable chillers, computer room air-conditioning units (CRACs) and pumps to have their outputs modulated to suit the actual cooling requirements. This provides significant energy savings, not only during part-load operation of the data hall, but also to offset plant over-provision to suit the requirements of tier ratings, and to allow chillers to operate more efficiently when the external temperatures are lower.

- Free cooling chillers These do not provide “free” cooling, but when air temperatures are low they can provide cooling with very low energy use, without the need to operate energy-hungry refrigerant compressors.

- Adiabatic cooling The evaporation of water can significantly improve the efficiency of the heat rejection process on many different types of heat rejection equipment. Its usage still needs to be carefully considered as the reduction in water use is increasingly being targeted as a global issue on a par with energy use.

- Direct and indirect free cooling Increasingly, systems are being installed that allow the heat from the data hall to be removed directly using air only, without the energy-intensive process of transferring it into and out of chilled water systems. This option is generally not cheap, principally because more conventional heat rejection systems are generally required for back-up and for operation at higher external temperatures.

- High-efficiency uninterruptible power supplies (UPS) The necessary provision of UPS to the data load and frequently the air-conditioning equipment can represent a significant proportion of the energy losses in the system. At low loads, which frequently occur due to the need to run multiple systems to maintain the relevant tier rating, the UPS efficiencies can drop significantly. Correct selection of high-efficiency UPS equipment can significantly reduce this.

- Segregation of plant areas Segregating plant areas with high heat loads from those with low loads, but requiring cooler temperatures, can provide significant energy savings. More efficient systems that can only cool to high temperatures can be used in the high load areas, with the less efficient systems required for cooling to lower temperatures reserved for the lower load areas.

- Challenging internal conditions Historically data centres have been required to operate at fixed temperatures, typically about 22°C ±1°C and 50% relative humidity ±10%. Standards introduced by ASHRAE allow for the data centre to operate within a much wider envelope of 18-27°C. The standards also allow for operation at temperatures above those recommended for short periods, which can assist with improving the efficiency of free cooling solutions.

- Use of common low-energy strategies such as lighting control and low energy lighting, heat reclaim and so on.

Sustainability is not only about energy use - there are also wider environmental concerns to be considered. Increasingly, new-build data centres are registering for environmental assessments such as BREEAM or LEED. This is being driven by local planning requirements, corporate requirements for a more sustainable operation, and the rise in co-location data centres, where the requirements of attracting clients can involve more than just providing a data rack and a power supply.

Water use is also an increasingly important issue. As well as the conventional requirements for humidification, more efficient cooling strategies frequently incorporate evaporation-based cooling.

As data centres use so much energy, they present far greater opportunities to reduce the carbon footprint than many building types and provide very rapid payback on investment. The main barrier to change is the conservatism of IT clients, who tend to take a “safety first” approach to specification and who are often not affected by the energy cost of their security strategies. Closer working by facilities management and IT teams during design will help to develop a successful low-energy strategy.

07/ Capital allowances

Capital allowances in the UK represent huge opportunities for owners or investors to recover some of the costs of their investment through the tax system.

Between 60% and 80% of the capital value of a data centre may qualify for some form of allowance, and with the enhanced capital allowances (ECA) scheme there are further opportunities to secure 100% first year recovery in energy and water-saving plant.

This could mean a recovery of 12-16% of the costs in tax savings over the building’s life. Many investors are also looking to use the business premises renovation allowance to secure 100% allowances on the costs of the renovation of an existing out-of-use business premises into a new data centre.
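The relationship between the qualifying proportion and the quoted tax saving can be sketched as a simple product. The 20% tax rate below is an assumption that is not stated in the article; it is used here only because it reproduces the quoted 12-16% range. The sketch also ignores the timing of allowances and any discounting.

```python
ASSUMED_TAX_RATE = 0.20  # assumption: not stated in the article


def lifetime_tax_saving_fraction(qualifying_fraction: float,
                                 tax_rate: float = ASSUMED_TAX_RATE) -> float:
    """Fraction of capital cost recovered as tax relief over the building's life."""
    if not 0.0 <= qualifying_fraction <= 1.0:
        raise ValueError("qualifying_fraction must be between 0 and 1")
    return qualifying_fraction * tax_rate


for qualifying in (0.60, 0.80):  # range of qualifying capital value quoted in the text
    saving = lifetime_tax_saving_fraction(qualifying)
    print(f"{qualifying:.0%} qualifying -> {saving:.0%} of cost recovered")
```

A different tax rate would scale the result proportionally, so the figures should be checked against the rates in force when a scheme is appraised.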

08/ Procurement

The essential issues associated with the procurement of data centres involve the detailed design and co-ordination of the services installation, pre-ordering of major items of plant and thorough testing and commissioning. Investment in time upfront genuinely does reduce the overall duration of projects.

Both co-location and end user projects remain engineering led, and the lead contractor is often a service specialist. The buildings themselves are relatively simple, but the services installation has the potential to be very complex, involving significant buildability and maintainability issues.

Projects are typically let as lump sum contracts to specialist contractors on the basis of an engineer’s fully detailed and sized service design. Increasingly, in recognition that management of multidisciplinary design teams is challenging, clients are appointing single-source, multidisciplinary professional teams.

Detailed design of the services is essential for co-ordination purposes, which is particularly difficult due to the size of pipework, ductwork and cable feeds involved, and the extent of back-up circuits. The detailed design process will also contribute to the identification and elimination of single points of failure at an early stage.

HV capacity is invariably on the critical path and if either network reinforcement or additional feeds are required, lead-in times of more than 12 months are common in the UK. Early orders ahead of the appointment of the principal contractor are also necessary to accelerate the programme and to secure main plant items such as HV transformers, chillers, CRACs, AHUs and standby generators. Full testing and commissioning is critical to the handover of the project and clients will not permit slippage on testing periods set out in the programme.

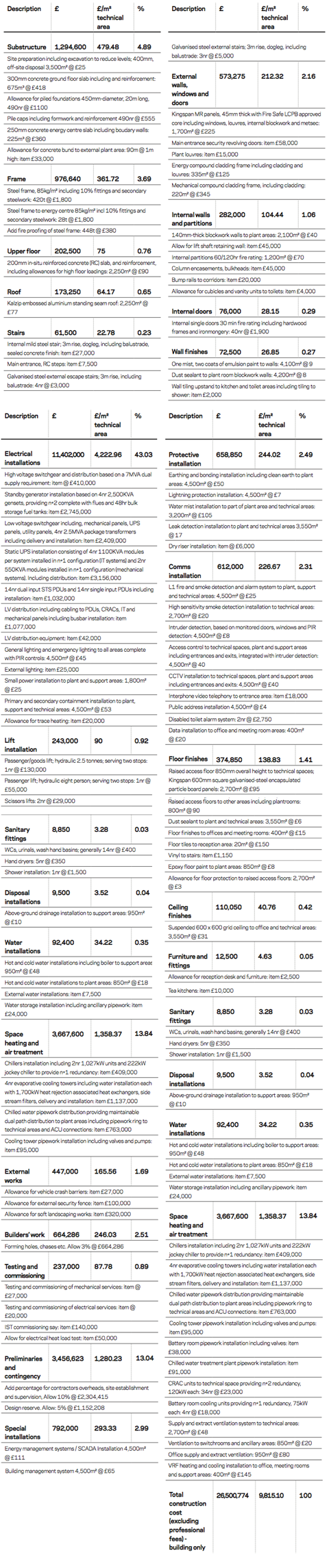

09/ Cost breakdown

The cost model is based on a two-storey, tier 3, 4,500m2 warehouse-style development designed to manage power loads of up to 1,500W/m2. The scheme comprises two technical spaces each with a gross internal area of 1,350m2, 850m2 of associated internal plant areas, 600m2 of external plant deck and 950m2 of support facilities.

The electrical infrastructure to the site is via A and B supplies with onsite dedicated substations. Further resilience is provided by uninterruptible power supply and diesel generators at n+1. The technical areas are completed to a category A finish and capable of delivering up to 20kW per rack. Support areas are complete to a cat B finish.

- The m2 rate in the cost breakdown is based on the technical area, not gross floor area.

- The costs of site preparation, external works and external services are included.

- Professional and statutory fees and VAT are not included.

- Costs are given at fourth quarter 2013 price levels, based on a location in north-west England. The pricing level assumes competitive procurement based on a lump-sum tender. Adjustments should be made to account for differences in specification, programme, location and procurement route.

- Because of the high proportion of specialist services installations in data centres, regional adjustment factors should not be applied.
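The areas and loads quoted for the cost model can be cross-checked with a few lines of arithmetic. The sketch below assumes that the 4,500m2 total covers the internal areas only and excludes the external plant deck; that reading is an inference from the figures rather than something the article states. The total cost used at the end is a placeholder, not a figure from the cost model.

```python
TECH_SPACES = 2
TECH_SPACE_AREA_M2 = 1350
INTERNAL_PLANT_M2 = 850
SUPPORT_M2 = 950
EXTERNAL_PLANT_DECK_M2 = 600   # assumed to sit outside the 4,500m2 total
DESIGN_LOAD_W_PER_M2 = 1500


def technical_area_m2() -> int:
    """Combined area of the two technical spaces."""
    return TECH_SPACES * TECH_SPACE_AREA_M2


def gross_internal_area_m2() -> int:
    """Technical space plus internal plant and support areas."""
    return technical_area_m2() + INTERNAL_PLANT_M2 + SUPPORT_M2


def peak_technical_load_kw() -> float:
    """Design power load across the technical area at the maximum density."""
    return technical_area_m2() * DESIGN_LOAD_W_PER_M2 / 1000


def rate_per_technical_m2(total_cost: float) -> float:
    """Cost rate expressed on technical area, as in the cost breakdown."""
    return total_cost / technical_area_m2()


print(technical_area_m2())        # 2700
print(gross_internal_area_m2())   # 4500
print(peak_technical_load_kw())   # 4050.0

placeholder_cost = 1_000_000      # hypothetical total, for illustration only
ratio = rate_per_technical_m2(placeholder_cost) / (placeholder_cost / gross_internal_area_m2())
print(round(ratio, 2))            # rate on technical area vs rate on gross area
```

Because the rate is expressed on 2,700m2 of technical area rather than 4,500m2 of gross area, it will be about two-thirds higher than an equivalent rate quoted on gross floor area, which matters when comparing against other benchmarks.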

10/ Cost model
